RFO

Prepared by Marek Isalski, CTO at FAELIX, on 2025-01-24.

Executive Summary

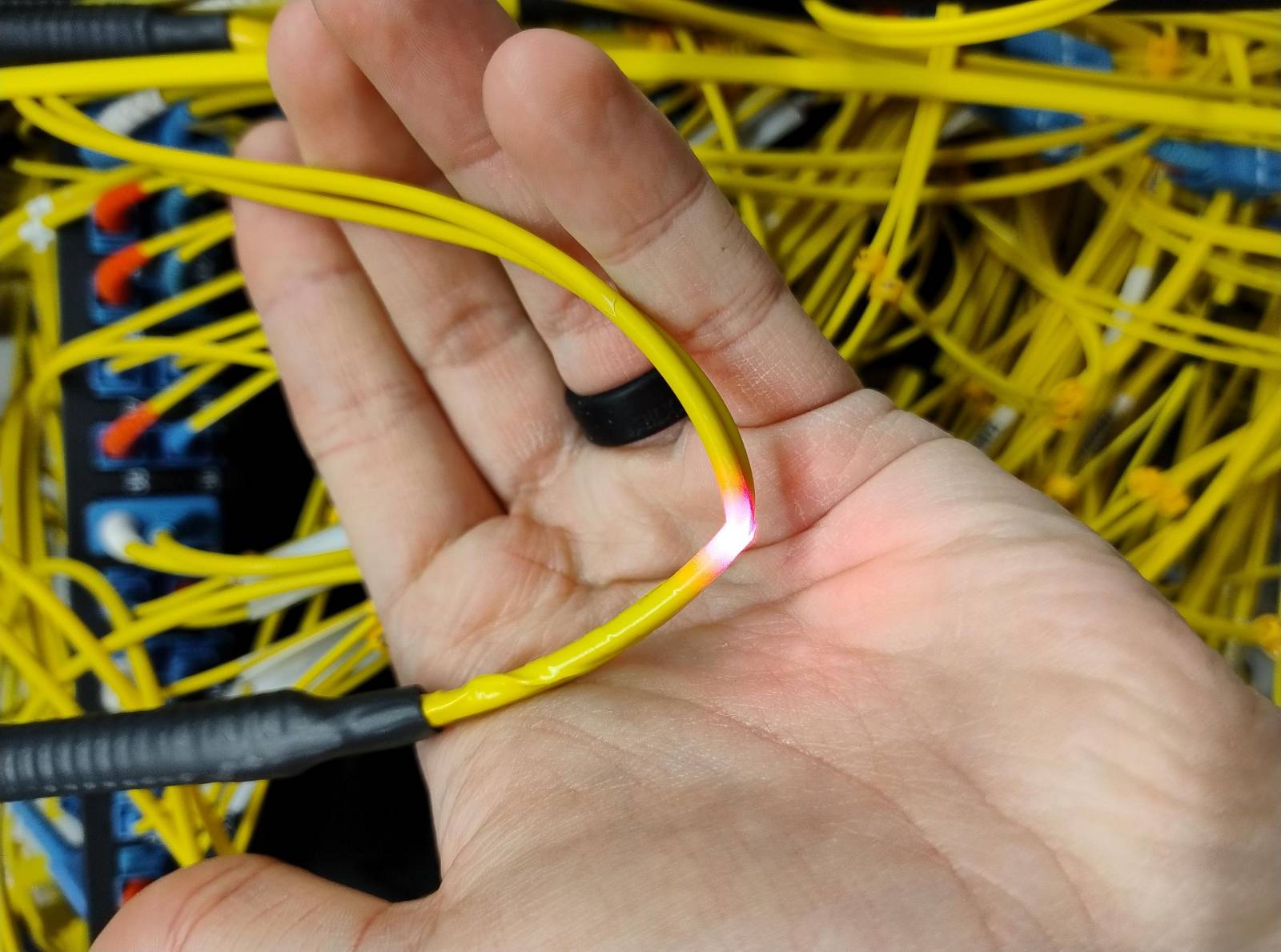

Around the middle of the daytime of 16th January, an optical fibre trunk between Telehouse West (our point of presence we call "THW") and DigitalRealtyTrust LON2 (our "IXN" POP) suffered damage. Initially this caused a complete loss of light, but then light levels crept back up for a time with the signal hovering around the minimum acceptable level for our optics, before dropping back down to an unmeasurably low level. This caused instability in the switch-to-switch connectivity, but also high CPU load as the link flapped up and down, both of which contributed to a period of very high packet loss. We brought the link out of service, and during testing that evening our fibre provider found no issues with the underlying dark fibre segment. We returned the fibre to service, but the following day saw a recurrence at around the same time. The dark fibre provider found damage to the connection from Faelix within the fibre provider's ODF during their visit to THW, and so they replaced this. The fibre provider has not been able to ascertain how that damage occurred, in spite of contacting the site owners for engineer access logs.

Background

Faelix has network capacity on a dark fibre "ring" between THW, Telehouse North (THN) and IXN. A switch at each site connects to a switch at each other site via a LACP link, each member of the LACP pair taking one direction around the ring over DWDM.

During this incident the light level between THW and IXN dropped, causing one of the LACP members of links traversing that path to drop out of the aggregating link. This causes a small amount of CPU usage on the ring switches affected as they recompute paths. Also during this recomputation the CPU usage increases as more packets are steered via the CPU ("slow path") rather than via the switch ASIC ("fast path"). However, due to the nature of the damage to the fibre, we saw the link flap up and down many times, which caused a prolonged period of high CPU usage and slow path traffic. This resulted in a large amount of packet loss, but also a backlog of computation caused by the flapping, which we could only resolve on one of the switches in THW by rebooting it.

Further Actions

Bringing the affected path back into service on 2025-01-26.

During this incident we identified that our ring switches perform poorly when the site-to-site connectivity flaps a large number of times, which can occur when light levels are just on the cusp of viable transmission.

We will perform lab testing to see whether an updated software version for these switches resolves this performance problem, or whether we need to plan for a technology replacement across the three sites.

Incident Timeline

This is shown in full on our status page for the incident but the key times are:

2025-01-16 11:22:51 — initial alerts for switch ports down

2025-01-16 11:26 — incident identified and status page updated

2025-01-16 11:29:29 — another round of link flapping

2025-01-16 11:30:26 – 11:38:12 — transmission levels on the margin of viability causing link up/down flapping (198 link state changes) and very high CPU usage, too high to even keep up with logging these events on some devices

2025-01-16 11:36 — ticket raised with dark fibre provider

2025-01-16 11:38 — decision to reboot switch in THW

2025-01-16 11:45 — switch finally responds to reboot command

2025-01-16 11:47 — switch completes reboot

2025-01-16 11:49:01 — dark fibre provider acknowledges ticket

2025-01-16 13:48:42 – 13:49:57 — more flapping light levels (but circuit is now taken down)

2025-01-16 14:33 — dark fibre provider assigns ticket to field engineering team

2025-01-16 18:36:17 – 19:18:27 — dark fibre provider's engineering team begins intrusive testing

2025-01-16 19:30:00 — we reject the fix by the dark fibre provider as light levels are too low (an additional 4dBm of loss is observed on one of the two fibres in the duplex pair)

2025-01-16 19:36:25 – 19:57:08 — dark fibre provider's engineering team continues tests

2025-01-16 19:52 — we report back that light levels are back to normal, and the dark fibre provider's engineer leaves site

2025-01-16 04:04 — light levels stable for the last approximately 8 hours, circuit brought back into service

2025-01-17 11:51:12 – 11:51:33 — light level drops too low to establish connectivity on the THW-IXN path again

2025-01-17 11:51:57 – 11:52:39 — recurrence of light level loss, CPU usage on switches again very high, decision to take path out of service

2025-01-17 11:56 — ticket re-opened with dark fibre provider

2025-01-17 11:57:44 – 11:58:15 — recurrence of light level loss

2025-01-17 11:58:49 – 11:59:51 — recurrence of light level loss

2025-01-17 12:20 — escalated with dark fibre provider

2025-01-17 12:33 — dark fibre provider begins intrusive testing at THW

2025-01-17 16:14 — dark fibre provider begins intrusive testing at IXN

2025-01-20 14:55 — dark fibre provider states that a damaged patch in THW was replaced on the evening of 16th

2025-01-23 17:11 — the dark fibre provider sends through this picture of the damaged patch cable (cross-connect) taken from within their ODF in THW

- 2025-01-26 04:00 — planned window to bring the affected fibre path back into service